Certainly. Markov Random Fields (MRFs) are a fundamental concept in machine learning and computer vision, and they play a central role in modeling the context-dependent nature of information-processing tasks such as object recognition. Here are the basic concepts, the motivation, and a brief history of MRFs:

Basic Concepts:

- Random Variables: In probability theory, a random variable is a quantity whose value depends on the outcome of a random experiment. In an MRF, each node is a random variable representing some property of the data being analyzed, for example the intensity or label of a single pixel in an image.

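- Markov Property: In an MRF, each variable is conditionally independent of all other variables given its neighbors in the graph. This generalizes the familiar Markov-chain property (the next state depends only on the current state, not on how the system arrived there) from a sequence in time to an arbitrary undirected graph, which is how MRFs model dependencies between neighboring data points such as adjacent pixels.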

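- Graphs: An MRF is an undirected graphical model: each node represents a random variable, and each edge represents a direct probabilistic dependency between the two variables it connects. Variables that are not joined by an edge influence each other only through paths of intermediate variables. The graph together with a probability distribution that satisfies its Markov property is what is called a Markov Random Field (also known as a Markov network).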

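- Potential Functions: MRFs attach a non-negative potential function ψ_C to each clique C (a set of nodes that are all connected to one another) in the graph. The joint distribution is the normalized product of these potentials, P(x) = (1/Z) ∏_C ψ_C(x_C), where the partition function Z sums that product over all possible configurations. Potentials are often expressed through energy (or cost) functions, E_C = -log ψ_C, so that P(x) ∝ exp(-Σ_C E_C(x_C)). The potentials themselves are not probabilities; they score how compatible the values of neighboring variables are.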
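To make these pieces concrete, here is a minimal sketch assuming a hypothetical four-node chain of binary variables with one hand-picked pairwise potential per edge (the names `NODES`, `EDGES`, `COUPLING`, and the helper functions are illustrative, not from any library). It builds the joint distribution as the normalized product of clique potentials by brute-force enumeration, then checks the Markov property numerically: conditioning x1 on all other variables gives the same result as conditioning on its neighbors x0 and x2 alone.

```python
import itertools
import math

# Hypothetical 4-node chain MRF: x0 - x1 - x2 - x3, each variable binary (0/1).
NODES = [0, 1, 2, 3]
EDGES = [(0, 1), (1, 2), (2, 3)]  # in a chain, the edges are the maximal cliques
COUPLING = 1.0  # assumed strength of the pairwise potential


def pairwise_potential(a, b):
    """Non-negative clique potential: larger when the two neighbours agree."""
    return math.exp(COUPLING if a == b else -COUPLING)


def unnormalized(x):
    """Product of the clique potentials for one joint configuration x."""
    p = 1.0
    for i, j in EDGES:
        p *= pairwise_potential(x[i], x[j])
    return p


# Enumerate every configuration to compute the partition function Z.
CONFIGS = list(itertools.product([0, 1], repeat=len(NODES)))
Z = sum(unnormalized(x) for x in CONFIGS)
JOINT = {x: unnormalized(x) / Z for x in CONFIGS}


def conditional(node, value, evidence):
    """P(x_node = value | x_k = evidence[k] for every k in evidence), by brute force."""
    def matches(x):
        return all(x[k] == v for k, v in evidence.items())
    num = sum(p for x, p in JOINT.items() if matches(x) and x[node] == value)
    den = sum(p for x, p in JOINT.items() if matches(x))
    return num / den


# Markov property: given its neighbours x0 and x2, x1 does not depend on x3.
full = conditional(1, 1, {0: 1, 2: 1, 3: 0})
local = conditional(1, 1, {0: 1, 2: 1})
print(f"Z = {Z:.4f}")
print(f"P(x1=1 | x0, x2, x3) = {full:.4f}")
print(f"P(x1=1 | x0, x2)     = {local:.4f}")
```

Enumerating Z costs 2^n evaluations for n binary variables, so this only works for toy graphs; larger models need smarter inference such as message passing, sampling, or optimization. The point here is just to show that the clique factorization and the Markov property agree.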

Motivation:

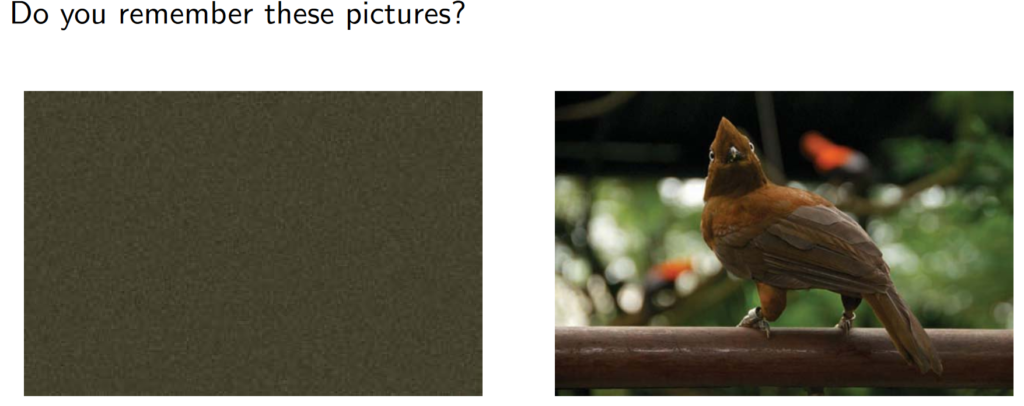

As you mentioned, most information processing tasks, including object recognition, are context-dependent. Consider object recognition in an image: the identity of an object in an image can depend on the surrounding objects, the texture of the background, lighting conditions, and more. Therefore, it’s essential to consider not just individual data points but also their relationships to make accurate inferences.

MRFs provide a mathematical framework for modeling and capturing these dependencies. By combining the Markov property with a graph structure, they let us define probabilistic models that take contextual information into account, whether spatial (neighboring pixels) or temporal (neighboring frames), when making decisions or predictions.

History:

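The roots of Markov Random Fields lie in statistical physics, most notably the Ising model of ferromagnetism from the 1920s. The general probabilistic theory was developed in the late 1960s and 1970s, including the Hammersley–Clifford theorem, which links the Markov property to factorization into clique potentials. The framework was then popularized in computer vision and image analysis in the 1980s and 1990s, notably through Geman and Geman's 1984 work on Bayesian image restoration.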

In computer vision, MRFs became a key tool for tasks such as image restoration, image segmentation, object recognition, and stereo vision. They were used to model the relationships between neighboring pixels, for example encoding the prior belief that adjacent pixels usually share the same label, so that noisy local evidence could be combined with context.
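As an illustration of modeling pixel relationships, the sketch below denoises a binary image with an Ising-style MRF: each pixel takes a value in {-1, +1}, a pairwise term rewards 4-connected neighbors that agree, and a unary term ties each pixel to its noisy observation. Inference uses iterated conditional modes (ICM), a simple greedy method chosen here for brevity that only reaches a local optimum. The weights `BETA` and `ETA`, the toy 8×8 image, and all function names are assumptions for illustration, not a reference implementation.

```python
# Minimal sketch: binary image denoising with an Ising-style MRF and ICM.
# Pixels take values in {-1, +1}. Assumed energy (lower is better):
#   E(x) = -BETA * sum over neighbouring pairs (i, j) of x_i * x_j
#          - ETA  * sum over pixels i of x_i * y_i
# where y is the observed noisy image and x is the restored image.
BETA = 1.0  # assumed weight rewarding agreement between 4-connected neighbours
ETA = 1.5   # assumed weight tying each pixel to its noisy observation


def neighbours(r, c, rows, cols):
    """Yield the 4-connected neighbours of pixel (r, c) that lie inside the image."""
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if 0 <= rr < rows and 0 <= cc < cols:
            yield rr, cc


def icm_denoise(noisy, max_sweeps=10):
    """Iterated conditional modes: visit pixels in raster order and set each one
    to the value that minimises the energy given its neighbours' current values.
    Stops when a full sweep changes nothing; the result is a local optimum."""
    rows, cols = len(noisy), len(noisy[0])
    x = [row[:] for row in noisy]  # start from the observation
    for _ in range(max_sweeps):
        changed = False
        for r in range(rows):
            for c in range(cols):
                # Local field: the terms of E(x) that involve pixel (r, c).
                field = BETA * sum(x[rr][cc] for rr, cc in neighbours(r, c, rows, cols))
                field += ETA * noisy[r][c]
                # x_rc = sign(field) minimises -x_rc * field; ties go to +1.
                best = 1 if field >= 0 else -1
                if best != x[r][c]:
                    x[r][c] = best
                    changed = True
        if not changed:
            break
    return x


# Hypothetical test image: left half +1, right half -1, four isolated flipped pixels.
clean = [[1 if c < 4 else -1 for c in range(8)] for _ in range(8)]
noisy = [row[:] for row in clean]
for r, c in [(1, 1), (5, 2), (2, 6), (6, 5)]:
    noisy[r][c] = -noisy[r][c]

restored = icm_denoise(noisy)
wrong_before = sum(a != b for ra, rb in zip(clean, noisy) for a, b in zip(ra, rb))
wrong_after = sum(a != b for ra, rb in zip(clean, restored) for a, b in zip(ra, rb))
print("pixels wrong before:", wrong_before)
print("pixels wrong after: ", wrong_after)
```

Each update looks only at a pixel's neighbors and its own observation, which is the Markov property at work. Raising `BETA` smooths more aggressively, while raising `ETA` trusts the observed pixels more.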

Over time, MRFs have found applications in various fields beyond computer vision, including natural language processing, bioinformatics, and social network analysis, among others.

In summary, Markov Random Fields are a powerful tool for modeling and solving context-dependent problems by capturing probabilistic dependencies in data through graphical representations. They have a rich history and continue to be a valuable resource in many areas of machine learning and data analysis.